Many website owners do not fully understand how web crawlers work when it comes to search engine optimization (SEO). They focus on specific keywords to improve their rankings, hoping to at least reach the first page of results. Understanding what robots.txt is and how it works will help website owners and their webmasters (even if the owner and the webmaster are the same person).

Web robots such as Googlebot crawl the web, reading the pages they find so that search engines can index them. The robots.txt file is part of the Robots Exclusion Protocol (REP). It tells web crawlers which sections of a website are open for crawling and which are not.

A little history of this protocol is helpful. Knowing where it came from makes it easier to understand the robots.txt format and how to create a robots.txt file for your own website. It really is not difficult.

The genesis of robots.txt dates back to 1994, when it was proposed by Martijn Koster, who at the time was working for a company called Nexor. Koster's server had reportedly been overwhelmed by a badly behaved web crawler written by Charles Stross. Koster wrote the protocol in response, and it quickly became a de facto standard followed by search engine crawlers.

The protocol was created to keep badly behaved web robots from crawling parts of a website and overloading it. Well-behaved robots fetch a site's robots.txt file before crawling to see which parts of the site are open to them. From the rules in that file, they know which sections are allowed and which are disallowed.

However, it is not a guarantee. robots.txt is a signpost that says either “enter” or “do not enter”: somewhat of an honor system! Because it is not a security system, a malicious robot can simply ignore it. Just know and understand that robots.txt is not a locked door; it is only a signpost.

Syntax of Robots.txt

The robots.txt syntax is simple and not hard to implement. Keep in mind that the way you use it can help or hurt your SEO rankings, because you are telling web crawlers what they can and cannot look at.

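A user-agent is a software program that acts on behalf of a user, such as a browser or a web crawler. In robots.txt, the User-agent line names the crawler that the rules below it apply to, and an asterisk (*) means all crawlers. Understanding this is important for using the robots.txt syntax.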

The basic format is as follows:

- This format allows full access to the website:

User-agent: *
Disallow:

- This format blocks all access:

User-agent: *
Disallow: /

- If you want to block one category it would look like this:

User-agent: *
Disallow: /category/

- If you want to block one page it would look like this:

User-agent: *
Disallow: /page/
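The four basic patterns above can be checked with Python's standard-library urllib.robotparser module. This is a minimal sketch: the host example.com and the sample paths are placeholders, and the helper name can_crawl is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The four basic robots.txt patterns from the list above.
EXAMPLES = {
    "allow_all": "User-agent: *\nDisallow:",
    "block_all": "User-agent: *\nDisallow: /",
    "block_category": "User-agent: *\nDisallow: /category/",
    "block_page": "User-agent: *\nDisallow: /page/",
}


def can_crawl(robots_txt: str, path: str, agent: str = "*") -> bool:
    """Return True if `agent` may fetch `path` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # example.com is a placeholder host; only the path is used for matching.
    return parser.can_fetch(agent, "https://example.com" + path)


if __name__ == "__main__":
    for name, rules in EXAMPLES.items():
        results = {p: can_crawl(rules, p) for p in ("/", "/category/shoes", "/page/", "/about")}
        print(name, results)
```

Running it prints, for each pattern, which sample paths a crawler may fetch; only the block_all pattern blocks the home page.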

These are the basics of how search engine spiders interact with your website. From the outset, you should understand that the way you write your robots.txt file has a direct bearing on your rankings.

You can have all the keywords needed for higher rankings, but if you tell search crawlers to stay out of the pages where those keywords are, those pages will not rank. You are simply giving instructions about what you want crawled and what you do not. Let’s go into this a little more.

As you can see, the Disallow instruction lets the webmaster keep web crawlers out of certain pages, categories, or files.

There is also an Allow instruction that lets robots such as Googlebot into a specific path inside a section that is otherwise disallowed. It looks like this:

User-agent: *
Disallow: /news/
Allow: /news/USA

Because the User-agent is *, this tells all crawlers (Googlebot included) that they can crawl /news/USA even though the rest of the /news/ category is off limits.
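How does a crawler choose between Disallow: /news/ and Allow: /news/USA when both match? Google documents, and RFC 9309 specifies, that the most specific (longest) matching rule wins and that Allow wins a tie. The sketch below implements that precedence for plain path prefixes only (no wildcards); the function name is_allowed is hypothetical. Some older parsers, including Python's urllib.robotparser, instead apply the first rule that matches, so listing the Allow line before the broader Disallow line is a safer ordering for them.

```python
from typing import List, Tuple

# Each rule is (directive, path_prefix), e.g. ("disallow", "/news/").
Rule = Tuple[str, str]


def is_allowed(rules: List[Rule], path: str) -> bool:
    """Longest-match precedence: the matching rule with the longest path wins;
    on a tie, Allow beats Disallow. If no rule matches, the path is allowed."""
    best_len = -1
    allowed = True
    for directive, prefix in rules:
        if not prefix:
            # An empty value places no restriction, so it never matches.
            continue
        if path.startswith(prefix):
            is_allow = directive.lower() == "allow"
            if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
                best_len = len(prefix)
                allowed = is_allow
    return allowed


NEWS_RULES = [("disallow", "/news/"), ("allow", "/news/USA")]

if __name__ == "__main__":
    for p in ("/news/USA", "/news/world", "/about"):
        print(p, is_allowed(NEWS_RULES, p))
```

With the rules from the example, /news/USA is allowed and /news/world is not, regardless of the order in which the two lines appear.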

Once you understand how to create a robots.txt file, you will have much better control over how crawlers see your website.

How to create it and add it to your site

Before you add robots.txt to your website, ask yourself whether you need the file at all. If it is not there, web robots are free to crawl your whole site. You can search the web for more detailed reasons to use or not use a robots.txt file; here we briefly sum up the main reasons to use one.

First, using robots.txt is recommended because web robots look for it anyway, so why not give them instructions? Second, if the file is missing, your server logs will show 404 (Not Found) errors from robots that request it and cannot find it. Third, you can control which content, folders, and files you want the robots to see and which ones you do not.

So there you have it: a brief case for using a robots.txt file on your website! Other reasons you may read about are largely secondary.

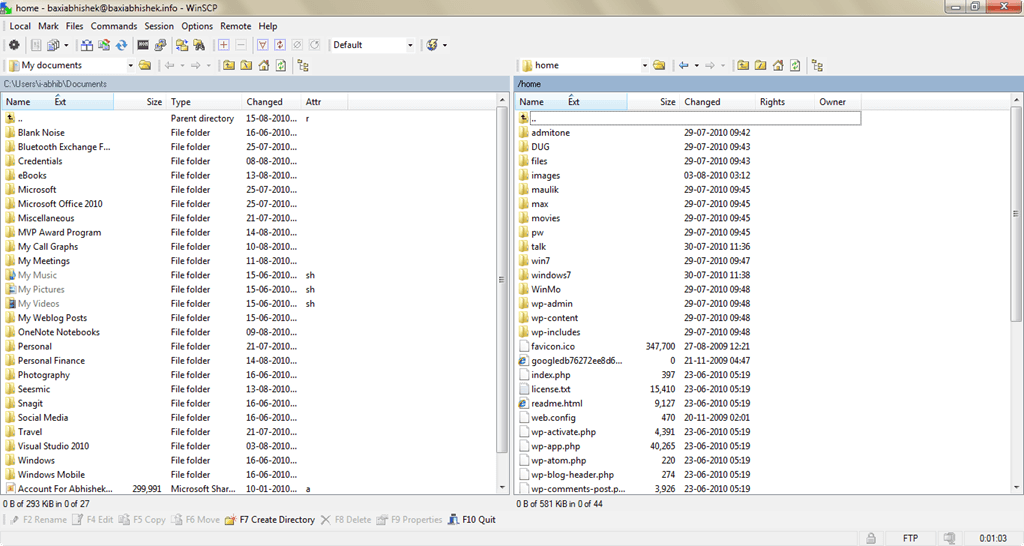

For a robots.txt file to work, it must be placed in your website's root directory so that it is served at site.com/robots.txt. Once it is there, you can control how web crawlers crawl and index your site for better SEO.

We will use WordPress as an example, since many blogging and membership websites run on it. Connect to your site using cPanel or an FTP client. Create the robots.txt file as a plain text file in Notepad or a similar editor, then upload it to your root directory.

It follows the format described above. Here is an example:

User-agent: *
Disallow: /wp-content/plugins
Disallow: /wp-content/themes
Allow: /wp-content/uploads

In this example, the rules apply to all web robots. We disallowed crawling of our plugins and themes folders, but explicitly allowed our uploads folder!
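If you prefer to generate the file rather than type it, here is a minimal Python sketch that writes the WordPress rules above to a robots.txt file and then checks a few paths with the standard-library urllib.robotparser. The helper names, the sample paths, and the example.com host are placeholders; in practice you would point web_root at your site's root directory (the folder you reach through cPanel or FTP). The sketch uses a temporary directory instead.

```python
import tempfile
from pathlib import Path
from typing import List
from urllib.robotparser import RobotFileParser

# The WordPress rules from the example above, one directive per line.
WORDPRESS_RULES = [
    "User-agent: *",
    "Disallow: /wp-content/plugins",
    "Disallow: /wp-content/themes",
    "Allow: /wp-content/uploads",
]


def write_robots_txt(web_root: Path, lines: List[str]) -> Path:
    """Write robots.txt into web_root (the directory served at the site's root URL)."""
    target = web_root / "robots.txt"
    # Plain UTF-8 text, one directive per line, ending with a newline.
    target.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return target


def check(robots_file: Path, path: str) -> bool:
    """Ask urllib.robotparser whether any crawler ('*') may fetch the path."""
    parser = RobotFileParser()
    parser.parse(robots_file.read_text(encoding="utf-8").splitlines())
    return parser.can_fetch("*", "https://example.com" + path)


if __name__ == "__main__":
    # A temporary directory stands in for your real web root here.
    with tempfile.TemporaryDirectory() as tmp:
        robots = write_robots_txt(Path(tmp), WORDPRESS_RULES)
        for p in ("/wp-content/plugins/akismet/readme.txt",
                  "/wp-content/uploads/2020/01/photo.jpg",
                  "/blog/hello-world"):
            print(p, check(robots, p))
```

Only the plugins path comes back as blocked; the uploads and blog paths are allowed.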

There are WordPress SEO plugins that generate your XML sitemap and add it to your robots.txt file automatically. A more detailed explanation of sitemaps can be found in our article.

Robots.txt Examples

If a website has a robots.txt file, you can find it at the following address:

site.com/robots.txt

You can use this to check whether your website already has a robots.txt file and what it tells the robots that crawl the web. You can do the same for anyone’s site to see what they allow or disallow.
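Because the file always lives at the root of the host, a crawler finds it by dropping the path from any page URL and appending /robots.txt. Below is a minimal Python sketch of that lookup using only the standard library; the function names are hypothetical, and fetching requires network access. Following RFC 9309, a 4xx response (such as 404) is treated here as "no robots.txt, no restrictions".

```python
from typing import Optional
from urllib.error import HTTPError
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any port); replace path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots(page_url: str, timeout: float = 10.0) -> Optional[str]:
    """Download a site's robots.txt; return None if the server answers with a 4xx status."""
    try:
        with urlopen(robots_url(page_url), timeout=timeout) as resp:
            return resp.read().decode("utf-8", errors="replace")
    except HTTPError as err:
        if 400 <= err.code < 500:
            return None  # no robots.txt: treat the site as unrestricted
        raise


if __name__ == "__main__":
    print(robots_url("https://www.google.com/search?q=robots"))
    # Requires network access:
    # print(fetch_robots("https://www.google.com/"))
```

For example, robots_url("https://www.google.com/search?q=robots") returns https://www.google.com/robots.txt.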

If you go to www.google.com/robots.txt, you will see a rather large set of Allow and Disallow instructions. Most well-known sites have similarly large robots.txt files; you can see the same for Amazon.

Here is how we set up our own robots.txt file:

https://scanbacklinks.com/robots.txt

User-agent: EtaoSpider
Disallow: /

User-agent: Nutch
Disallow: /

User-agent: larbin
Disallow: /

User-agent: heritrix
Disallow: /

User-agent: ia_archiver
Disallow: /

User-agent: *
Disallow: /admin*
Disallow: /go/
Disallow: /*?url=*
Disallow: */contact*
Disallow: */contact_us_thanks*
Disallow: */sb-rank-result*
Disallow: */dapa-result*
Disallow: */sb-rank-result*
Disallow: */tos*
Disallow: */libre-forms/*
Disallow: */keywordreport/*
Disallow: */imagealtreport/*
Disallow: */brokenlinkreport/*
Disallow: */export-csv/*
Disallow: */noresult*

Sitemap: https://scanbacklinks.com/sitemap.xml

If you compare it with Amazon’s or Google’s robots.txt file, you can see that ScanBacklinks uses a less complicated file. Still, ScanBacklinks blocks some robots from crawling the site entirely:

User-agent: EtaoSpider  # major Chinese search engine
Disallow: /

User-agent: Nutch  # Nutch crawler
Disallow: /

User-agent: larbin  # Larbin crawler
Disallow: /

User-agent: heritrix  # Heritrix, a crawler designed for web archiving
Disallow: /

User-agent: ia_archiver  # Alexa crawler
Disallow: /

The Sitemap line at the end points crawlers to the site's XML sitemap. This helps website owners with SEO, since they want their articles to rank high.
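To see how a crawler applies per-robot groups like these, the following sketch feeds a shortened copy of the ScanBacklinks file (only some of its rules) to Python's standard-library urllib.robotparser. That parser picks the group whose User-agent name appears in the crawler's name, falls back to the * group, and collects Sitemap lines through site_maps() (Python 3.8 or later). It does not understand * wildcards inside paths, so only a plain-prefix rule (/go/) is checked here. The crawler name ExampleBot is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# A shortened copy of the ScanBacklinks robots.txt shown above.
ROBOTS_TXT = """\
User-agent: Nutch
Disallow: /

User-agent: ia_archiver
Disallow: /

User-agent: *
Disallow: /go/
Sitemap: https://scanbacklinks.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Nutch has its own group, which blocks the whole site.
print(parser.can_fetch("Nutch", "https://scanbacklinks.com/"))
# Any other crawler falls back to the "*" group: /go/ is blocked, the rest is open.
print(parser.can_fetch("ExampleBot", "https://scanbacklinks.com/go/some-link"))
print(parser.can_fetch("ExampleBot", "https://scanbacklinks.com/"))
# Sitemap lines are collected independently of the User-agent groups.
print(parser.site_maps())
```

It should print False, False, True, and then the sitemap URL in a list.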

Robots.txt file tricks

So far we have covered the basics of robots.txt syntax. The Allow directive was not part of the original 1994 protocol, but most major crawlers now understand it. Combining Disallow and Allow in your robots.txt file is a trick in itself.

For example, you could disallow an entire folder and allow one file in that folder. It may look something like this:

User-agent: *
Disallow: /news/
Allow: /news/USA

This keeps all web crawlers out of everything in that category except for the one page.

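Wildcards: for more flexible rules in your robots.txt, you can use two special characters. They are simple wildcards rather than full regular expressions, and major crawlers such as Googlebot and Bingbot support them: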

* (Asterisk) - matches any sequence of characters, including none

$ (Dollar sign) - marks the end of the URL

For example, this blocks crawling of all files of a certain type (here, PDF files):

User-agent: *
Disallow: /*.pdf$
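One way to understand the two wildcard characters is to translate a rule into a regular expression: * becomes "any characters", and a trailing $ anchors the end of the URL path (including any query string). The sketch below does that translation with Python's standard re module; the function names are hypothetical, and it only answers whether a single rule matches a single path. Choosing between several matching rules is a separate step.

```python
import re


def rule_to_regex(pattern: str) -> "re.Pattern":
    """Translate a robots.txt path pattern using * and $ into a compiled regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything literally, then turn each escaped '*' back into '.*'.
    body = re.escape(pattern).replace(r"\*", ".*")
    return re.compile(body + ("$" if anchored else ""))


def rule_matches(pattern: str, path: str) -> bool:
    """True if the rule matches the URL path (path plus any query string)."""
    # re.match anchors at the start only, mirroring robots.txt prefix matching.
    return rule_to_regex(pattern).match(path) is not None


if __name__ == "__main__":
    for p in ("/files/report.pdf", "/files/report.pdf?page=2", "/files/report.html"):
        print(p, rule_matches("/*.pdf$", p))
```

Here rule_matches("/*.pdf$", "/files/report.pdf") is True, while the same path with ?page=2 appended is not, because $ requires the URL to end right after .pdf.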

You can also use the Crawl-delay instruction, which asks a crawler to wait a set number of seconds between requests and so limits how many pages it fetches per day. Some crawlers honor it, but Googlebot ignores it. It looks like this:

User-agent: *
Crawl-delay: 10

This tells the robot to wait 10 seconds between requests. It can help smaller sites save bandwidth. On a larger website, however, it is less useful, because a long delay caps how many pages a crawler can get through in a day.
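Crawlers that honor Crawl-delay read it per User-agent group. Python's standard-library urllib.robotparser exposes the value through crawl_delay(), so the sketch below reads it and computes the resulting upper bound on requests per day. The helper name max_requests_per_day and the crawler name ExampleBot are placeholders.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
"""


def max_requests_per_day(robots_txt: str, agent: str) -> Optional[int]:
    """Upper bound on daily requests for `agent`, or None if no non-zero Crawl-delay applies."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    delay = parser.crawl_delay(agent)  # seconds between requests, or None
    if not delay:
        return None
    return SECONDS_PER_DAY // delay


if __name__ == "__main__":
    print(max_requests_per_day(ROBOTS_TXT, "ExampleBot"))
```

For the example file this gives 8,640 requests per day (86,400 seconds divided by 10), which shows why a 10-second delay is fine for a small site but restrictive for a large one.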

If you have XML sitemaps, it is a good idea to list them in your robots.txt file with a Sitemap line. It tells search engines such as Google, Bing, and Yandex where your sitemaps are. That is exactly what ScanBacklinks did in our example above.

For a more detailed explanation of robots.txt files, see Controlling Crawling and Indexing, a helpful resource from Google for webmasters (and if you manage your own WordPress site, you are a webmaster).

Got questions? Just write them in comments below!

We hope this explanation helps as you learn to use robots.txt files on your website, especially a WordPress website. You can also follow the links we have provided in this article for more information.
